LOL

So what? It’s absolutely true and makes absolutely no difference to anyone

I still prefer Andisearch over all others. It was the first AI search, long before all the others were released, with its own LLM, not a copy of others.

It really doesn’t matter which AI you prefer to use. You’re wrong for using AI, period.

This is a garbage tier take. You use spell correction. That’s a form of computerized decision making, which is artificial intelligence. You MAY have a point if you’re referring to LLMs, but that’s incredibly arguable, and you haven’t stated any reasoning behind your opinion.

Bullshit. You haven’t made any argument at all. You just supported a position without any backing behind it. So your take is garbage. I don’t use spell correction because I don’t need it, as I understand the language.

Use of AI is not immoral in and of itself. That argument is made in my response. I supported that argument by explaining why AI isn’t immoral, in that it’s a useful tool.

“I don’t use spell correction because I don’t need it, as I understand the language.”

Run-on sentence. You are using a comma as a separator between two fully separate thoughts. That’s the wrong punctuation to accomplish the task you want. You should either use a semi-colon or a conjunction, or just make them separate sentences.

Your understanding of the language is clearly perfect and any tool that provides feedback when you make mistakes is clearly unnecessary.

Ok, so basically you’re saying you’re a grammar Nazi? Why? You still haven’t made any argument for your position. You’re just a dick. What a waste of time and data. Congrats for that, I guess?

My argument, which has already been made twice, is that computer decision making is not immoral. That’s ridiculous. It’s incredibly useful in thousands of ways. All of this is AI.

You ACTUALLY make no argument. “AI is bad. If you use ai, you’re evil.”

That is not an argument, just an opinion, and has no support.

I’m using spellcheck as an example because it’s relevant.

If you continue to believe I’ve made no argument, well, I guess your reading comprehension isn’t up to where it would need to be to understand basic human interaction.

You are of course welcome to have and express your opinion, but when it’s just a shit take and it’s unsupported, don’t get pissed about getting called out.

Again you fail to make any point or support any argument. Congratulations, you made words. But you haven’t even made it clear what side you’re on. You’re like a fucking late night ad show. You’re the human version of QVC. You haven’t even made it clear what your problem is with me, let alone my initial point. You can eat my ass or stfu. Up to you.

you’re wrong for using writing. Writing leads to laziness and forgetfulness. Future generations will hear much without being properly taught and will appear wise but not be so.

This is ALSO a garbage tier take. You have assumed your opponent’s opinion is based on AI making people dumb. You’re providing them with ammo. There are many possibilities for why they think this. As far as you know, your statement is entirely irrelevant to the person you’re responding to.

not my words, i was referring to Plato’s Phaedrus to make fun of the “ai bad” comment. I’m sorry. Forgive me.

Socrates tells a brief legend, critically commenting on the gift of writing from the Egyptian god Theuth to King Thamus, who was to disperse Theuth’s gifts to the people of Egypt. After Theuth remarks on his discovery of writing as a remedy for the memory, Thamus responds that its true effects are likely to be the opposite; it is a remedy for reminding, not remembering, he says, with the appearance but not the reality of wisdom. Future generations will hear much without being properly taught, and will appear wise but not be so, making them difficult to get along with.

No written instructions for an art can yield results clear or certain, Socrates states, but rather can only remind those that already know what writing is about. Furthermore, writings are silent; they cannot speak, answer questions, or come to their own defense.

Accordingly, the legitimate sister of this is, in fact, dialectic; it is the living, breathing discourse of one who knows, of which the written word can only be called an image. The one who knows uses the art of dialectic rather than writing:

“The dialectician chooses a proper soul and plants and sows within it discourse accompanied by knowledge—discourse capable of helping itself as well as the man who planted it, which is not barren but produces a seed from which more discourse grows in the character of others. Such discourse makes the seed forever immortal and renders the man who has it happy as any human being can be.”

OpenAI’s mission statement is also in their name. The fact that they have a proprietary product that is not open source is criminal, and they should be sued out of existence. They are now just like Sun Micro after Apache was made open source: irrelevant, they just haven’t gotten the memo yet. No company can compete against the whole world.

i agree FOSS is the way to go, and that OpenAI has a lot to answer for… but FOSS is not the only way to interpret “open”

the “open” was never intended as open source - it was open access. the idea was that anyone should have access to build things using AI; that it shouldn’t be for only megacorps who had the pockets to train… which they have, and still are doing

they also originally intended that all their research and patents would be open, which i believe they’re still doing

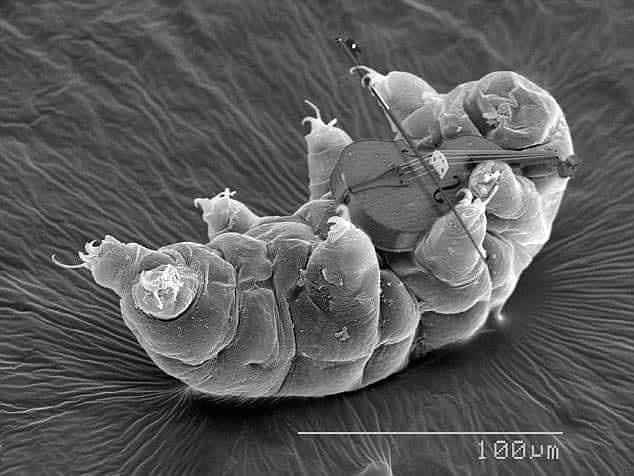

So the same people who have no problem using other people’s copyrighted work are now crying when the Chinese do the same to them? Find me a nano-scale violin so I can play a really sad song.

Planck could not scale small enough.

Can you put a liuqin in there?

Pedant time: That’s microscale not nanoscale.

You can shoot me now, it’s deserved.

That’s obviously a cello.

Maybe a cello if it was human grade. But that’s tardigrade.

You more elegantly said what I came to say.

I think ChatGPT is more self aware than OpenAI is.

Good luck suing them

Copycat gets copycatted.

Womp womp. I’m sure OpenAI asked for permission from the creators for all its training data, right? Thief complains about someone else stealing their stolen goods, more at 11.

Oh, are we supposed to care about substantial evidence of theft now? Because there’s a few artists, writers, and other creatives that would like to have a word with you…

Yes, so what?

https://stratechery.com/2025/deepseek-faq/

Who the fuck cares? They’re all doing this.

I couldn’t give less of a fuck.

They’re obviously trying so hard for regulatory capture in the states it’s embarrassing.

It’s only ok when we do it, cause we’re the good guys!

Cry me a fucking river, David.