Introduction

Why does Google insist on making its assistant situation so bad?

In theory, the assistant should be the best it’s ever been. It’s better at “understanding” what I ask for, and yet it is less capable than ever of actually doing it.

This post is a rant about my experience using modern assistants on Android, and why, while I used to use these features actively in the mid-to-late-2010s, I now don’t even bother with them.

The task

Back in the late 2010s, I used to be able to hold the home button and ask the Google Assistant to create an event based on this email. It would grab the context from my screen, and do exactly that. As far as I can tell, this has been impossible for years now.

Trying to find the “right” assistant

At some point, my phone stopped responding to “OK Google”. I still don’t know why it won’t work.

Holding down the Home bar (the home button went the way of the dodo) brings up an assistant-style UI, but it’s dumb as bricks and only Googles the web. Useless.

So, I installed Gemini. I asked it to perform a basic task. It responded “in live mode, I cannot do that”. When I asked how I could get it to create a calendar event for me, it could not answer the question, telling me instead to open my calendar app and create a new event. I know how to use a calendar. I want it to justify its existence by providing more value than a Google search. It was ultimately unable to answer the question.

Searching the internet, I found that both of the ways I had been using assistant features were apparently the wrong way to do it. You have to hold down the power button; that’s how you launch the proper one. My internal response was:

No, that’s for the power menu. I don’t want to dedicate it to Assistant.

Well, apparently, that’s the only way to do it now, so there I go sacrificing another convenience by turning it on.

Pulling teeth with Gemini

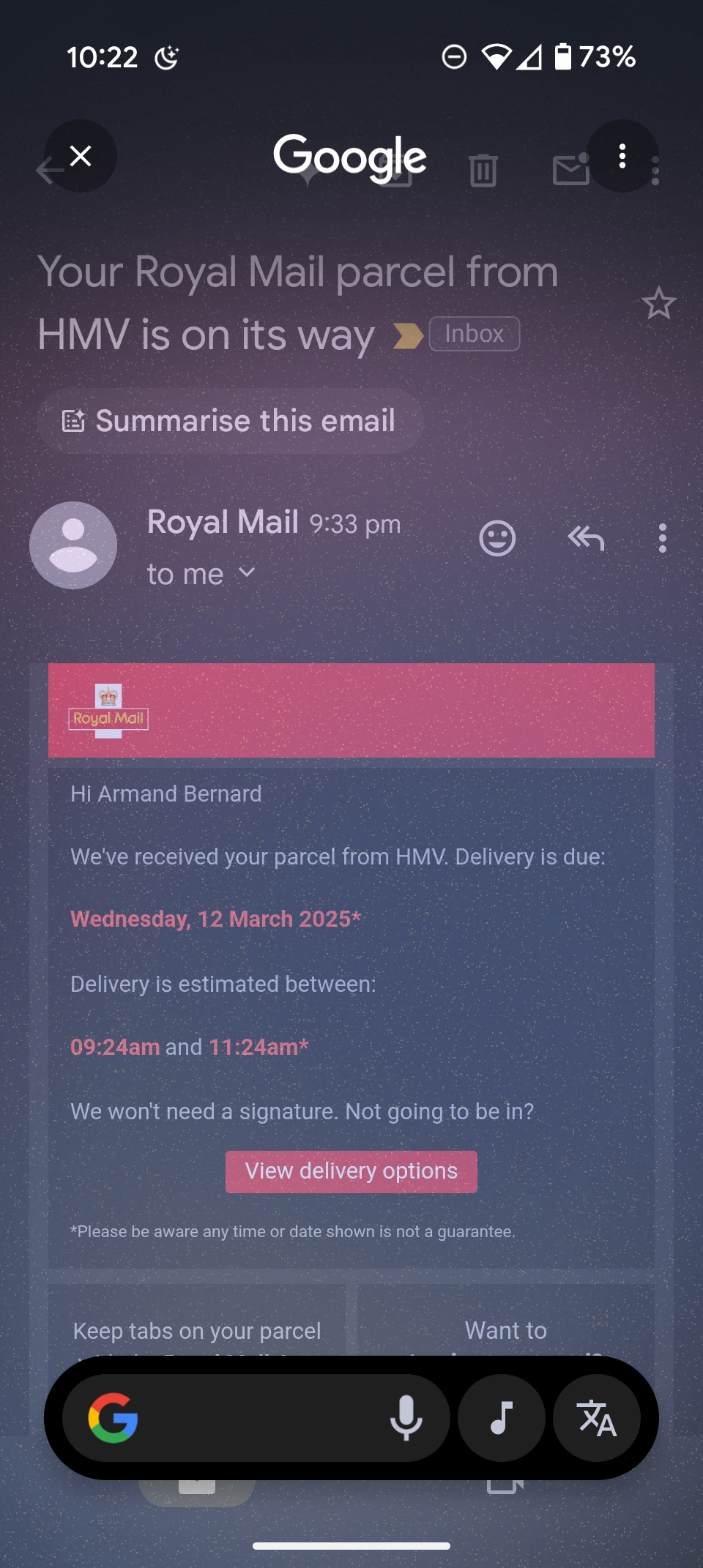

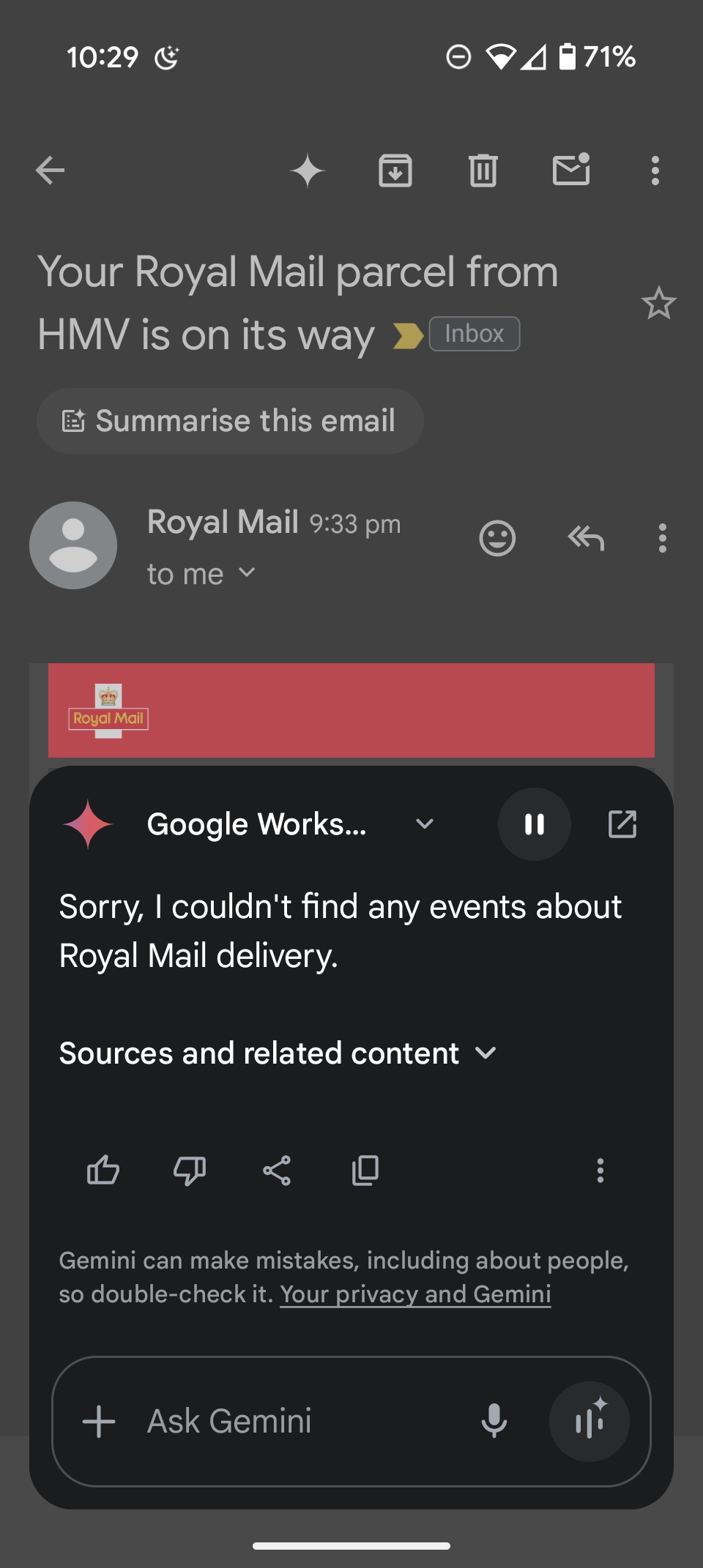

So I ask this power-menu-version of Gemini to do the same simple task. I tried 4 separate times.

First, it created a random event “Meeting with a client” on a completely different day (what?).

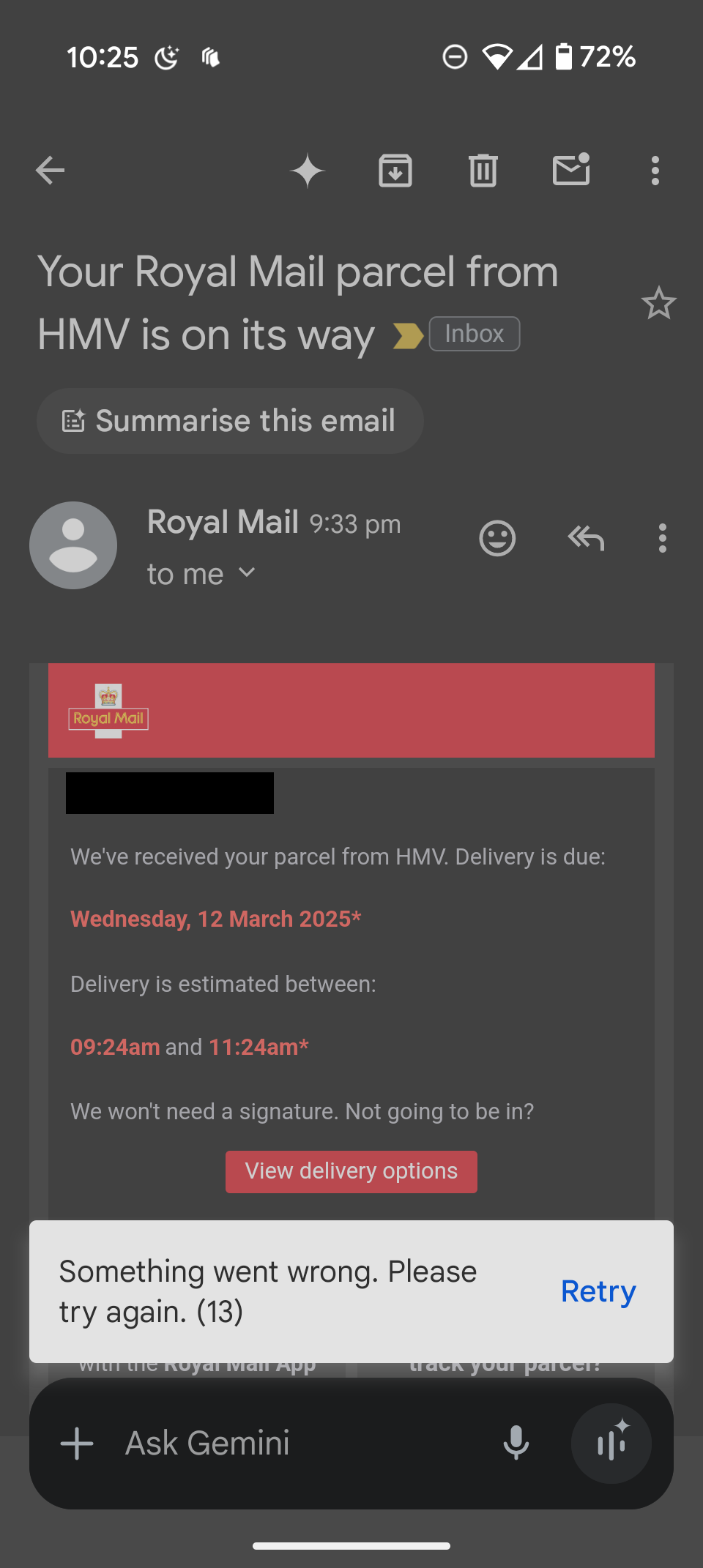

Second time it just crashed with an error.

The third time, it asked me which email to use, giving me a list, but that list did not contain the email I was interested in. I asked it to find the Royal Mail one. No success.

So, quite clearly, it wasn’t using screen content.

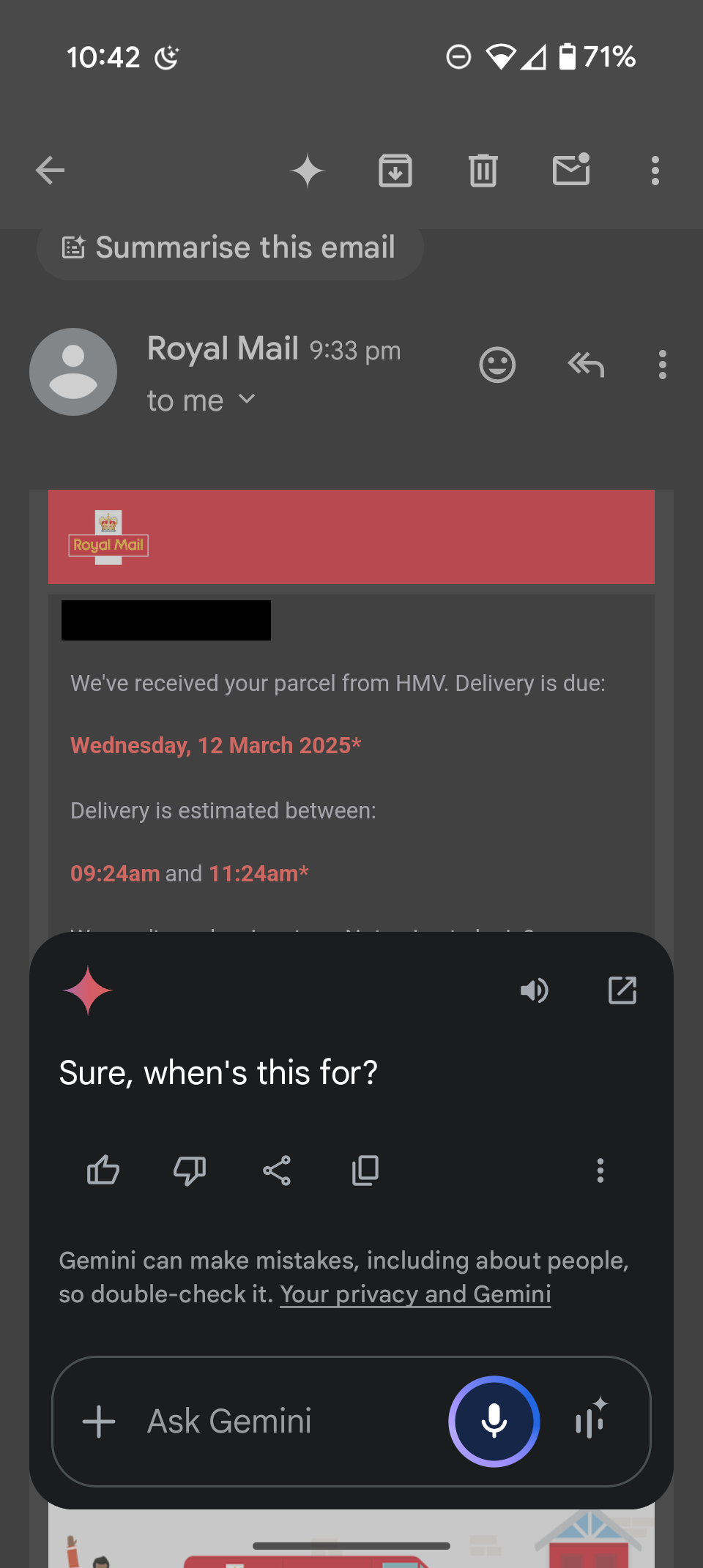

I rephrased the question: “Please create an event from the content on my screen”. It replied “Sure, when’s this for?”

I shouldn’t have to tell you. That’s the point. It’s right there.

Conclusion

There are too many damn assistant versions, and they are all bad. I can’t even imagine what it’s like to also have Bixby in the mix as a Samsung user. (Feel free to let me know below.)

It seems like none of them are able to pull context from what you are doing anymore, and you’ll spend more time fiddling and googling how to make them work than it would take for you to do the task yourself.

In some ways, assistants have gotten worse than they were almost 10 years ago, despite billions in investments.

As a little bonus, the internet is filled with AI slop that makes finding out real facts, real studies from real people harder than ever.

I write this all mostly to blow off steam, as this stuff has been frustrating me for years now. Let me know what your experience has been like below; I could use some camaraderie.

Others have pointed out plausible reasons specific to Google, but they also laid off a fuckton of people, and that never really works out long term unless the company has a good reason to lay people off (like if they lose a huge client or find one or a product line fails).

But the recent tech company layoffs seemed pretty arbitrary, especially the stealth layoffs (like the “return to office” demands that just made people with options go elsewhere). I wouldn’t be shocked if the Google Assistant team lost some talented people who either left, were foolishly laid off, or were shifted to Gemini (which, like all consumer generative A.I., is still in beta and hemorrhaging money).

I just say “consumer” there because it seems like highly focused A.I. projects could be legit businesses. Like the protein folding project at Google and things like that. But the chatbots and image generators might never be useful and profitable.

AI is useless at actually helping with normal tasks.

I feel like we were so close to having Jarvis-like assistants. Then every company decided to replace the existing tools with their alpha-state AI, and suddenly we’re back to square -1.

Yeah, that’s pretty much what it feels like to me. Everyone wants to cram AI into everything even though it’s often worse and uses far more resources.

AI tools are great at some things. For example, I had a spreadsheet of product names and SKUs, and we wanted to clean up the names by removing extra spaces, using consistent characters like - for a separator, and that kind of thing. It was very quick to have an AI tool do that for me.
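For a sense of what that kind of cleanup involves, here is a minimal Python sketch. It assumes the names are plain strings and that "consistent characters" means collapsing runs of whitespace and turning dash-like separators into a spaced hyphen. The function name `clean_product_name` and the sample values are made up for illustration; this is not the tool the commenter used.

```python
import re

# Assumption: en dashes and em dashes are always separators, while a plain
# hyphen is a separator only when it has whitespace on at least one side
# (so "T-shirt" keeps its hyphen).
_SEPARATOR = re.compile(r"\s*[\u2013\u2014]\s*|\s+-\s*|\s*-\s+")
_WHITESPACE = re.compile(r"\s+")


def clean_product_name(name: str) -> str:
    """Collapse extra whitespace and use ' - ' as the only separator."""
    name = _WHITESPACE.sub(" ", name.strip())
    name = _SEPARATOR.sub(" - ", name)
    return name.strip()


if __name__ == "__main__":
    # Hypothetical sample rows, not real data.
    for raw in ["  Widget   \u2013  Blue ", "T-shirt \u2014 Large", "Widget -Red"]:
        print(repr(raw), "->", repr(clean_product_name(raw)))
```

A deterministic script like this is easy to re-run and check, though an AI tool may still be quicker for one-off jobs with messier, less predictable input.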

The reason? It’s right there in the title:

Google.

I have no idea about Google; I don’t use it. But Alexa, fuck, Alexa is just more and more and more awful. It barely hears you, it does the wrong thing, it seems to just forget timers. The other day my wife asked “What is the weather on Sunday?” and it started rattling off about the weather in San Diego. Like, no, WTF Alexa.

Pure conjecture on my part but I think…

When these first came out, Google approached them in full venture capital mode with the idea of building a market first, then monetizing it. So they threw money and people at it, and it worked fairly well.

They tried making it part of a home automation platform, but after squandering the good will and market position of acquisitions like Nest and Dropcam, they failed to integrate these products into a coherent platform and needed another approach.

So they turned to media and entertainment, only to lose the Sonos lawsuit.

After that, the product appears to have moved to maintenance mode, where people and server resources are constantly being cut, forcing the remaining team to downsize and simplify the tech.

Now they are trying to plug it into their AI platform, but in an effort to compete with OpenAI and Microsoft, they are likely rushing that platform to market far before it is ready.

My most common use for Google Assistant was an extremely simple command. “Ok Google, set a timer for ten minutes.” I used this frequently and flawlessly for a long time.

Much like in your situation, it just stopped working at some point. It would either ask for more info it doesn’t need or report success while not actually doing it. I just gave up trying and haven’t used any voice assistant in a couple of years now.

Now try to add an extra layer of crap with English not being your native language. At this point only Zoom gives fairly correct transcripts; both Apple and Google are on the “you know what, I’d rather type this shit” level.

This is because hardcoded, human-written algorithms are still better at doing stuff on your phone than AI-generated actions.

It seems like they didn’t even test the chatbot in a real-life scenario, or train it specifically to be an assistant on a phone.

They should give it options to trigger stuff, like Siri with the workflows. And they should take their time and resources training it. They should give app developers a way to give the AI worker some structured data. The AI should be trained to search for the correct context using that API and to plug the correct data into the correct workflow.

I bet they just skipped that specific training of Gemini to work as a phone assistant.

Apple seems to plan exactly that, and that is why it will be released so late compared to the other LLM AI phone assistants. I’m looking forward to seeing if Apple manages to achieve their goal with AI (I will not use it, since I will not buy a new phone for that and I don’t use macOS).
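To make the structured-data idea concrete, here is a rough Python sketch of how it could fit together. Everything in it is hypothetical: `ScreenContext`, the `WORKFLOWS` registry, and `create_event` are invented names, not any real Android, Gemini, or Siri API. The only point is that the app hands over named fields and the assistant fills a fixed workflow from them instead of guessing.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Optional


@dataclass
class ScreenContext:
    """Structured data an app could expose to the assistant (hypothetical shape)."""
    app: str
    kind: str                 # e.g. "email"
    fields: Dict[str, str]    # named values supplied by the app itself


# Hypothetical registry of workflows the assistant is allowed to trigger.
WORKFLOWS: Dict[str, Callable[[ScreenContext], str]] = {}


def workflow(name: str):
    """Decorator that registers a function as a named workflow."""
    def register(fn: Callable[[ScreenContext], str]):
        WORKFLOWS[name] = fn
        return fn
    return register


@workflow("create_event")
def create_event(ctx: ScreenContext) -> str:
    # Uses only the fields the app supplied; nothing is guessed.
    return f"Event '{ctx.fields['subject']}' on {ctx.fields['date']}"


def run(name: str, ctx: Optional[ScreenContext]) -> str:
    """Run a workflow if the foreground app provided structured context."""
    if ctx is None:
        return "No structured context available"
    return WORKFLOWS[name](ctx)
```

With a made-up context such as `ScreenContext(app="mail", kind="email", fields={"subject": "Parcel delivery", "date": "2024-01-01"})`, calling `run("create_event", ctx)` fills the event from the supplied fields rather than asking "when’s this for?".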

I generally use Google Assistant to do about 4 things.

Check the weather, set a timer for X minutes, remind me to do something later today (e.g. get the mail at 4), and set an alarm.

After ‘upgrading’ to Gemini, I tried to get it to set a timer or something, and it wanted access to all kinds of information that is irrelevant to setting a goddamn timer.

I promptly disabled it and went back to Google Assistant, which does these 4 basic things without prying into my everyday life.

On top of that, Gemini burns through a bunch of electricity in a data center somewhere to get the basic things wrong.

It just straight up stopped sending me reminders that I’ve scheduled. Literally the one thing I used it for.

Who cares? Stop using Google products. They are an evil company.

I’ve developed a theory. I think the person who put the “don’t be evil” line in the mission statement for Google put it there for a clever reason. Not simply to say to the company “don’t be evil”, but so that we the consumers will know that, on the day they remove the line, they will have become truly evil and that we should abandon the company and its products with all due haste.

Like a canary page

I think that this is the result of a KPI.

At some point there was a fight inside Google between engineering and marketing about how to proceed with product development. Engineers wanted a better product, marketing wanted more eyeballs.

As a result, search became about “engagement”, or “Moar clicks is betterer”.

Seen in this light, your triggering of the assistant four times increased your use and thus your engagement. An engineer would point out that this is not a valid metric, but Google is now run by the marketing and accounting departments, not by engineers.

Another aspect that I only recently became aware of is that in order to get promoted, you need to make a global impact. This is why shit is changing for no particular reason or benefit and has been for a decade or so.

Four attempts once every few years is still way less usage than a single use every few weeks because it works the first time.

I didn’t say that it was a valid KPI 😇

Just so you know, your name’s visible in the “Gemini crashes” image.

Thanks bud

More like the awe has disappeared and just realizing it’s always been bad?

Weird. My Google Pixel 9 Pro XL has 3 navigation buttons.

That’s something that’s customizable using the stock firmware.

Settings -> Display & touch -> Navigation mode.

My Google Assistant still responds to “Hey Google” or “ok Google” just fine as well on my Google phone.